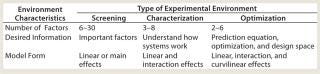

Global competition fueled by the power of information technology has forced the pharmaceutical and biotechnology industries to seek new ways to compete. The US Food and Drug Administration (FDA) has promoted quality by design (QbD) as an effective approach to speed up product and process development and create manufacturing processes that produce high-quality products that are safe and effective (1,2,3). Statistical design of experiments (DoE) is a tool that is central to QbD and the development of product and process “design space” (a combination of raw material and process variables that provide assurance that a quality product will be produced) (4). Much has been written about using DoE to create process design spaces. Here, I address factors that make it successful.

Experimentation Strategy

The first step in selecting a statistical design is creating a strategy for using DoE. You need a strategy based on a theory about experimentation. Experience over many decades in many different subject matters has identified some critical elements of an effective theory.

First is the Pareto principle, which states that only a small number of the many variables that can have an effect actually do have major effects. Experience has shown that typically three to six variables have big effects. As Juran has emphasized, they become the vital few variables (5).

The next important piece of the theory is that experimentation is sequential; you don’t have to collect all the data at once. A better strategy is to collect the right data in the right amount at the right time (6).

The third element is to keep your focus on process and product understanding and summarize this knowledge and understanding in a process model that can be used to develop the design space as well as enable process control, another critical element of QbD.
The process model is represented conceptually as Y = f(X), where Y denotes the process output variables, which are predicted from the levels of the X values (the process inputs and controlled variables).

Another element of the theory is that the experimental environment determines the experimental strategy and designs to be used. Diagnosis of this environment includes agreeing on goals and objectives; the number, type, and ranges of the variables (X values) to be studied; desired information regarding the variables; resource constraints; and available scientific theory regarding the effects of the variables (7).

Screening–Characterization–Optimization (SCO) Strategy: Table 1 summarizes an operational definition of the SCO theory of experimentation (7). This strategy identifies three experimental environments (screening, characterization, and optimization), and the table lists the desired information for each of those three phases.

Table 1: Comparing experimental environments
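
To make the conceptual model Y = f(X) more concrete, the following Python sketch estimates the effects of two variables, and of their interaction, from a two-level full factorial experiment. It is only an illustration: the factor names, the coded levels (-1 and +1), and the response values are hypothetical and are not data from any study cited here.

```python
from itertools import product

def two_level_design(n_factors):
    """All combinations of coded levels -1/+1 (a full two-level factorial)."""
    return list(product((-1, 1), repeat=n_factors))

def effect(column, responses):
    """Average response at the +1 level minus average response at the -1 level."""
    high = [y for level, y in zip(column, responses) if level == 1]
    low = [y for level, y in zip(column, responses) if level == -1]
    return sum(high) / len(high) - sum(low) / len(low)

# Hypothetical experiment: temperature and time, four runs.
design = two_level_design(2)          # (-1,-1), (-1,1), (1,-1), (1,1)
responses = [60.0, 70.0, 72.0, 90.0]  # assumed values, for illustration only

temperature = [run[0] for run in design]
time = [run[1] for run in design]
interaction = [a * b for a, b in zip(temperature, time)]

temperature_effect = effect(temperature, responses)
time_effect = effect(time, responses)
interaction_effect = effect(interaction, responses)

print("temperature effect:", temperature_effect)
print("time effect:", time_effect)
print("temperature x time interaction:", interaction_effect)
```

Each of the three estimates is computed from all four responses, so no run is used for only one purpose.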

Yan and Le-he illustrate SCO in their work (8), where they describe a fermentation optimization study that uses screening followed by optimization. In their investigation, 12 process variables were optimized. The first experiment used a 16-run Plackett–Burman screening design to study the effects of those variables. The four variables with the largest effects were studied subsequently in a 16-run optimization experiment. The optimized conditions produced an enzyme activity that was 54% higher than that obtained under the operating conditions used at the beginning of the experimentation.

The screening phase takes advantage of the Pareto principle. It explores the effects of a large number of variables with the objective of identifying a smaller number of variables to study further in characterization or optimization experiments. Ensuring that all critically important variables are considered in the experimental program is a critical aspect of the screening phase (9). Additional screening experiments involving more factors may be needed when the results of the initial screening experiments are not promising. On several occasions I’ve seen the screening experiment solve the problem with no additional experimentation needed.

When very little is known about a system being studied, “range-finding” experiments are sometimes used, in which one candidate factor is varied at a time to get an idea of what factor levels are appropriate to consider. Yes, varying one factor at a time can be useful.

The characterization phase helps us better understand a system by estimating interactions as well as linear (main) effects. Your process model is thus expanded to quantify how variables interact with each other as well as to measure effects of the variables individually.

The optimization phase develops a predictive system model that can be used to find useful operating conditions (design space) using response surface contour plots and perhaps mathematical optimization.
It is important to recognize that those two tools are synergistic; one tool does not serve as a substitute for the other. Product and process robustness studies are also part of the optimization phase.

The SCO strategy helps minimize the amount of data collected by recognizing the phases of experimentation. Using different phases results in the total amount of experimentation being performed in “bites.” Working in bites allows subject matter expertise and judgment to be applied more frequently, and certainly at the end of each phase. SCO strategy embodies seven strategies (namely, SCO; screening–optimization; characterization–optimization; screening–characterization; screening; characterization; and optimization) developed from single and multiple combinations of the screening, characterization, and optimization phases. The end result of each sequence is a completed project. There is no guarantee of success in any given instance, only that SCO strategy will “raise your batting average” (7).

Planning

With a general strategy to guide your experimentation, you are in a position to plan your experiments. Several issues need to be addressed during this step.

Defining the goals and objectives of your experiment is a very important activity because agreement among stakeholders regarding the objectives is critical to success and is frequently not present until after a discussion. It is also important to circulate a proposed design for comment and revise it based on input, both to get the best thinking of the organization incorporated in the experimentation and to gain alignment among the stakeholders on the proposed design.

Experimentation phase selection follows diagnosis of an experimental environment and determines the design(s) to be used. In one case I encountered in my work, a scientist was studying the effects of temperature and time on the performance of a formulation using three levels of each variable.
Further discussion identified that the purpose of the study was to better understand the system by identifying effects and interactions of the variables. Optimization was not an objective, so only two levels of each variable needed to be studied. Recognizing “characterization” as the experimental environment reduced the amount of experimentation needed by more than 50% (for a full factorial in two variables, for example, 2 × 2 = 4 runs replace 3 × 3 = 9), a significant reduction in time, personnel, and money that sped up study completion.

The repeatability and reproducibility of the measurement system used to measure process outputs (Y values) must be assessed. More replicate testing will be needed when measurement quality is poor. It should not be overlooked that DoE can be used to improve the repeatability, reproducibility, and robustness of analytical methods (10).

The amount and form of experimental replication must be addressed. When experimental reproducibility is good, single runs are sufficient. The law of diminishing returns is reached at about four runs per test condition. In one recent product formulation case, a scientist was having trouble creating the formulation because he didn’t recognize that high experimental variation was making it difficult for him to see the variables’ effects. He evidently suspected that something was wrong: sometimes he ran an experimental combination once, and at other times he made two, three, or even four repeat runs of the same factor combination. After several rounds of experiments, the effects of the variables were still unidentified. In a single experiment that took the high experimental variation into account and used the “hidden replication” characteristic of DoE, factorial designs produced a design space and an optimal formulation whose quality was 50% better than any previously found.

SCO strategy can also effectively address formulation optimization with an approach that is similar to that used for process variable optimization.
The objective is to develop formulation understanding, identify the ingredients that are most critical to formulation performance, and create a formulation design space. One effective strategy is to use a screening experiment to identify the most critical ingredients and follow up with an optimization experiment to define the formulation design space. That approach can reduce the amount of experimentation and time needed to optimize a formulation by 30–50%.

Environmental variables such as different bioreactors and other equipment, raw material lots, ambient temperature and humidity levels, and operating teams can affect results. Even the best strategy can be defeated if the effects of environmental variables are not properly taken into account. In one case, a laboratory was investigating the effects of upstream variables using two “identical” bioreactors. As an afterthought, both were included in the same experiment. There was some concern that using both reactors would be a waste of time and resources because they were “identical.” However, data analysis showed a big difference between their results. Those differences were taken into account in future experiments.

Special experimental strategies are also needed to reduce the effects of extraneous variables that creep in when an experimental program is conducted over a long time period. In one case, an experiment was designed using DoE procedures to study the effects of five upstream process variables. Data analysis produced confusing results and a poor fit of the process model to the data (low adjusted R² values). Analysis of the model residuals showed that one or more variables not controlled during the experiment had changed during the study, leading to poor model fit and confusing results. During the investigation, analysts realized that the experiment had been conducted over eight months. It is very difficult to hold an experimental environment constant over such a long time period.
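
A small numerical sketch may help show how an uncontrolled change over time can produce both a low adjusted R² value and a telltale pattern in the residuals. The data are hypothetical: one coded process variable, eight runs listed in time order, and an assumed upward shift of 4 units in the second half of the study that the fitted model knows nothing about.

```python
def fit_line(x, y):
    """Least-squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1

def adjusted_r2(y, predicted, n_terms):
    """Adjusted R-squared for a model with n_terms predictors plus an intercept."""
    n = len(y)
    y_bar = sum(y) / n
    ss_residual = sum((yi - pi) ** 2 for yi, pi in zip(y, predicted))
    ss_total = sum((yi - y_bar) ** 2 for yi in y)
    r2 = 1 - ss_residual / ss_total
    return 1 - (1 - r2) * (n - 1) / (n - n_terms - 1)

# Hypothetical runs in time order; the last four carry an unmodeled shift of +4.
x = [-1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0]
y = [8.0, 12.0, 8.0, 12.0, 12.0, 16.0, 12.0, 16.0]

b0, b1 = fit_line(x, y)
predicted = [b0 + b1 * xi for xi in x]
residuals = [yi - pi for yi, pi in zip(y, predicted)]  # observed minus predicted
adj_r2 = adjusted_r2(y, predicted, n_terms=1)

print("adjusted R2:", round(adj_r2, 3))
print("residuals in time order:", residuals)
```

In this made-up example the residuals are all negative in the first half of the study and all positive in the second, which is the kind of pattern that points to something having changed while the experiment was under way.
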
In each of those cases, it is appropriate to use “blocking” techniques when conducting experiments (11,12). Blocking accounts for the effects of extraneous factors such as raw material lots, bioreactors, and time trends. Experimentation is divided into blocks of runs in which experimental variation within a block is minimized. In the first case above, the blocking factor is the bioreactor; in the second case, the blocking factor is a time unit (e.g., months). The effects of those blocks are taken into account during data analysis, so the estimated effects of the variables being studied are not biased.

Design Creation

Now that you know the factors and levels to be studied and have selected the number of replicate runs and repeat tests to be made at each experimental combination, the blocking to be used (if needed), and the randomization to be used, you are ready to construct the design. You can select one from a catalog of designs contained in electronic or hard-copy files or use software that selects a design based on optimization criteria. Computer optimization (e.g., with JMP, www.jmp.com; Minitab, www.minitab.com; or Stat-Ease, www.statease.com, statistical software) is a useful tool for helping select a design. Be careful, however, in its use. Using software is not a substitute for understanding and using good DoE strategy. Computer selection will always give you a design. The important question is whether the design obtained is best for your situation.

METHOD FOR BUILDING PROCESS AND PRODUCT MODELS

Get to know your data: Identify trends, patterns, and atypical values. Examine summary statistics (mean, standard deviation, minimum, maximum, and the correlation matrix for the variables). Plot the data, including scatter plots of the process outputs versus process variables and appropriate time plots.

Formulate the model Y = f(X): Use trends identified in the plots and subject matter theory whenever possible to guide the selection of the model form.

Fit the model to the data: Identify significant variables, assess the fit of the model using the adjusted R² statistic, and check for any multicollinearity problems using variance inflation factors for the model variables.

Check the fit of the model: Construct plots of the residuals (observed minus predicted) to identify abnormal patterns or atypical data points that indicate that the model is inadequate.

Report and use the model: Use it as appropriate, document model data and the construction process, and establish a procedure to continually check the performance of the model over time.

You should always beware of automatic procedures. If a process model you select for a situation is correct, then the design selected by computer optimization is likely to work. If the model is wrong, then the computer-generated design will have issues and be less than useful. The problem is that you never know whether the model you select to create a design is appropriate until you have completed the experiment and analyzed the resulting data (9). Further discussion of computer-aided design of experiments is contained in a separate article (13).

Experiment Administration and Data Collection

Administration of an experimental program is a vital step that is often overlooked. It must be carefully planned and executed. As John Wooden, arguably the most successful college basketball coach ever, has admonished us, “Failing to plan is planning to fail.”

The first aspect of planning is to make sure that you have all the personnel, equipment, and materials needed to conduct the experimental program. There should be no waiting around, which can be a big source of waste in R&D laboratories.

An experiment should be randomized and conducted in the randomized order. If randomization isn’t done, or if runs are derandomized to make the experimental work more convenient, then a critical aspect of experimentation is ignored.
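
The combination of blocking and randomization described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the complete two-level design is run once in each block, the block labels (two bioreactors) and the number of factors are hypothetical, and a fixed seed is used only so that the same run sheet can be regenerated.

```python
import random
from itertools import product

def blocked_run_order(design, blocks, seed=None):
    """Return (block, run) pairs with the run order shuffled separately in each block."""
    rng = random.Random(seed)
    plan = []
    for block in blocks:
        runs = list(design)   # every block receives the complete design
        rng.shuffle(runs)     # randomize the order inside this block only
        plan.extend((block, run) for run in runs)
    return plan

# Hypothetical study: three two-level factors, run in two bioreactors.
design = list(product((-1, 1), repeat=3))
plan = blocked_run_order(design, blocks=["bioreactor A", "bioreactor B"], seed=1)

for order, (block, run) in enumerate(plan, start=1):
    print(order, block, run)
```

The printed list is the run sheet: the experiment is conducted in exactly that order, and the block label is recorded with each run so that block effects can be accounted for during data analysis.
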
Another important issue is finding out during testing that some experimental combinations cannot be completed. To help prevent this, evaluate all runs in a design for operability. If some are suspect, then make those runs first to see whether they can be done. If not, consider changing their levels (e.g., move the run closer to the center of the design) or changing the range of critical variables and recreating the design. Several options will become clear in each situation. The critical point is to consider the situation up front and plan for how to deal with an eventuality should it occur.

Data Analysis and Model Building

Many points could be made here; I will address a few. First, you need a process for conducting analysis such as that shown in the “Method for Building Process and Product Models” box (14). This roadmap gives you a plan to follow and points out key events that must happen. Next, you need to make effective use of graphics, a best practice for using statistical thinking and methods. Graphics work because of humans’ ability to see patterns in data that are too complex to be detected by statistical models.

EFFECTIVE EXPERIMENTATION: TIPS AND TRAPS

Define a clear objective for a program and each experiment.

Adopt an end-to-end view of the needed experimentation:

Use an experimental strategy that involves several phases that are linked and sequenced.

Take prudent risks to collect only data that are needed at any particular point in time — what is needed, not what is nice to have.

Make a careful diagnosis of the experimental environment to determine what design should be used.

In the beginning, be bold but not reckless.

Get all critical factors under investigation.

Understand how data will be analyzed before running an experiment.

Emphasize good fundamentals in all experimentation (randomization, replication, blocking, and so on).

Study the variables over a wide but realistic range.

Be patient; some problems take several experiments to solve.

Good administration of the experimentation process is critical:

Be sure that factor levels are set and data are collected as specified; prevent missed communications.

Test suspect combinations of factor levels first. If no problems are encountered, proceed with the rest of the design. Consider redesigning the experiments if problems are found.

Use analytical methods that produce high-quality measurements.

Make effective use of graphics: Always plot data and draw schematics to provide context for experimentation.

Conduct confirmation runs after analysis to verify the model.

No waiting is allowed: Have personnel, equipment, materials, information, and software available when they are needed.

When a poor fit of a model is obtained (a low adjusted R² value), it can be a result of several factors, including an important variable missing from the model, the wrong model being used (e.g., a linear model used when the response function is curved), and atypical values in the data (outliers). These issues are fairly well known. Poor measurement quality can also be a source of poor fit of a model to the data: your model may be correct and still have a low adjusted R² value because of high measurement variation introduced by a poor measurement system.

Residual analysis is another aspect of good statistical practice. A residual is the difference between the observed measurement and the value predicted by the model Y = f(X). Residual analysis provides much information (e.g., the presence of missing variables, outliers, and atypical values and a need for curvature terms in a model).

Confirmation Studies

A fundamental of good experimental practice is completing confirmation experiments. Doing so documents that the results and recommendations of your experiments can be duplicated and that the model you developed for the system accurately predicts response behavior. Confirmation experiments are best conducted in the environment where the results will be used, such as the manufacturing process, instead of a laboratory.

Findings, Conclusions, and Recommendations

It is good practice to make oral presentations of findings and recommendations before writing a report (14). Present first to a small group of stakeholders to assess reaction and receptivity to your results, then present to broader audiences.
You can improve your presentation using input you receive from various groups. Afterward, you are in a position to write a report (that will probably go unnoticed because your results will already be accepted and thus be old news).

Presentations and written reports should contain graphics summarizing and communicating important findings. Graphs might include histograms, dot plots, main-effects plots, two-factor interaction plots, and cube plots. A good graphic is understood by both presenter and user and is simple and easy to understand. There is elegance in simplicity.

Be careful when reproducing computer outputs directly into presentations and reports. Most computer outputs contain more information than your audience needs. Reduce displays of computer output to only what is needed to communicate your message. It is acceptable to put more detailed computer output in an appendix, but make sure that a reader will actually need all the information presented there.

Effective Use of DoE Creates a Competitive Advantage

Using DoE enables effective use of QbD in creating product and process design spaces and process control strategies and supports risk management. Like any other tool, its elements, strengths, and limitations must be understood for DoE to be used effectively. The “Tips and Traps” box provides a list of factors that enable effective use of QbD. Those suggestions were developed over years of successful projects and were effective in a number of different situations. The promise of effective DoE is that the route of product and process development will speed up through more cost-effective experimentation, product improvement, and process optimization. Your “batting average” will increase, and you will develop a competitive advantage in the process.

About the Author

Ronald D. Snee, PhD, is founder and president of Snee Associates, LLC, a firm dedicated to the successful implementation of process and organizational improvement initiatives, 10 Creek Crossing, Newark, DE 19711, 1-610-213-5595, fax 1-302-369-9971; [email protected].

REFERENCES

1.) Snee, RD. 2009. Quality by Design — Four Years and Three Myths Later. Pharm. Process www.pharmpro.com/articles/2010/03/government-and-regulatory-Implementing-Quality-by-Design:14-16.

2.) Snee, RD. 2009. Building a Framework for Quality by Design. Pharm. Technol online http://pharmtech.findpharma.com/pharmtech/Special+Section%3a+Quality+by+Design/Building-a-Framework-for-Quality-by-Design/ArticleStandard/Article/detail/632988?ref=25.

3.) Snee, RD. 2010. Robust Strategies for Improving Upstream Productivity. BioPharm Int. 23:S28-S33.

4.) ICH Q8 2005. Harmonized Tripartite Guideline: Pharmaceutical Development, Current Step 4 Version. International Conference on Harmonisation of Technical Requirements for the Registration of Pharmaceuticals for Human Use, Geneva.

5.) Juran, JM. 1992. Juran on Quality by Design: The New Steps for Planning Quality into Goods and Services, The Free Press, New York.

6.) Lonardo, A, RD Snee, and B. Qi. 2010. Time Value of Information in Design of Downstream Purification Processes — Getting the Right Data in the Right Amount at the Right Time. BioPharm Intl. 23:23-28.

7.) Snee, RD. 2009. Raising Your Batting Average: Remember the Importance of Strategy in Experimentation. Quality Progress December:64-68.

8.) Yan, L, and M. Le-he. Optimization of Fermentation Conditions for P450 BM-3 Monooxygenase Production by Hybrid Design Methodology. J. Zhejiang University Science B 8:27-32.

9.) Hulbert, MH. 2008. Risk Management in Pharmaceutical Product Development: White Paper Prepared by the PhRMA Drug Product Technology Group. J. Pharm. Innovation 3:227-248.

10.) Schweitzer, M. 2010. Implications and Opportunities of Applying QbD Principles to Analytical Measurements. Pharm. Technol. 33:52-59.

11.) Box, GEP, JS Hunter, and WG. Hunter. 2005. Statistics for Experimenters: Design, Innovation, and Discovery, Wiley-Interscience, New York.

12.) Montgomery, DA. 2005. Design and Analysis of Experiments, 7th Edition, John Wiley and Sons, New York.

13.) Snee, RD. 1985. Computer-Aided Design of Experiments: Some Practical Experiences. J. Quality Technol. 17:222-236.

14.) Hoerl, RW, and RD. Snee. 2002. Statistical Thinking: Improving Business Performance, Duxbury Press, Pacific Grove.