High-Throughput Process Development

April 1, 2008

Growing pipelines, shorter timelines, talent scarcity, reduced budgets: all these are issues faced by companies working in today’s biotechnology environment. The ultimate goal of a process development team is to stay off the “critical path” to drug approval. But how can such a team complete the work needed to create a robust manufacturing process under these pressures? To make development more effective, many companies are turning to high-throughput technologies within their development platforms. These technologies promise that scientists can explore larger experimental spaces and thereby get more out of each experiment, both reducing the number of optimization iterations and increasing the productivity of each hour spent in the laboratory.

PRODUCT FOCUS: ALL BIOLOGICALS

PROCESS FOCUS: PRODUCTION PROCESS DEVELOPMENT

WHO SHOULD READ: PROCESS ENGINEERS, CELL CULTURE ENGINEERS

KEYWORDS: CELL-LINE DEVELOPMENT, CELL CULTURE OPTIMIZATION, MEDIUM OPTIMIZATION, PROCESS DEVELOPMENT

LEVEL: INTERMEDIATE

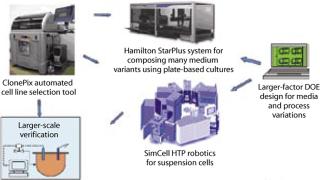

At Invitrogen, implementing technologies for high-throughput process development is an area of significant investment for the PD-Direct Bioprocess Services team (www.invitrogen.com/pddirect). Our team brings together best-in-class technologies from across the industry to solve critical bioproduction problems for our clients. Our experience has revealed certain myths and truths in applying high-throughput technologies for process development (Figure 1).

Figure 1

Myth: Plug-and-Play

Truth: Nothing substitutes for the first-hand experience of solving a real problem. This first myth–truth pairing is illustrated by our experience implementing the ClonePixFL system from Genetix Ltd. (www.genetix.com). The system selects cell colonies that are fluorescently labeled within a semisolid matrix: colonies are imaged, their fluorescence intensity is quantified, and the most highly fluorescent colonies are “picked” by a robotic system. The Genetix platform yields significant efficiency gains by robotically picking colonies chosen through a screening method, rather than relying on the random, manual selection of traditional limiting-dilution cloning. The ClonePix system is fully self-contained and can easily be rolled into a cell culture laboratory.

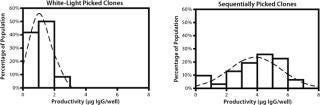

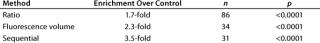

The importance of first-hand experience became clear when we put a system into use in a rigorous pilot study, for which the success criteria were set at a twofold improvement in productivity from a clonal culture. Using the packaged software, we initially screened on the ratio of fluorescent to white-light volume, which was believed to correlate with specific productivity. As we quickly learned, however, this screen selected for smaller colonies that proved to have poor viability in culture.

We then tried another filter available on the instrument, which screens on the fluorescent volume of each colony based on its correlation with total protein secretion. This screen appeared to favor fast-growing colonies rather than those with high specific productivity. These experiences led the team to design a custom sequential screen in which colonies were selected first on size and then on fluorescent volume, ultimately exceeding our success criteria with a 3.5-fold improvement in productivity (Figure 2). Establishing rigorous success criteria in a simulated project setting forced a more intimate understanding of how to use the tool and greatly enhanced our ability to deliver on the platform’s potential.
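The contrast among the three screens can be sketched in a few lines. This is a minimal illustration, not the ClonePix software: the `Colony` fields, the size window (the article says only that colonies were selected "based on size", so a lower and upper bound is an assumption here), and the colony values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Colony:
    colony_id: str
    size: float          # white-light (colony size) volume, arbitrary units
    fluor_volume: float  # integrated fluorescent volume, arbitrary units


def ratio_screen(colonies, top_n):
    """Rank by fluorescent / white-light volume (tends to favor small colonies)."""
    return sorted(colonies, key=lambda c: c.fluor_volume / c.size, reverse=True)[:top_n]


def fluor_volume_screen(colonies, top_n):
    """Rank by fluorescent volume alone (tends to favor large, fast-growing colonies)."""
    return sorted(colonies, key=lambda c: c.fluor_volume, reverse=True)[:top_n]


def sequential_screen(colonies, min_size, max_size, top_n):
    """Hypothetical sequential screen: keep colonies inside an assumed size
    window, then rank the survivors by fluorescent volume."""
    in_window = [c for c in colonies if min_size <= c.size <= max_size]
    return fluor_volume_screen(in_window, top_n)


# Hypothetical colonies for illustration only (not study data).
colonies = [
    Colony("A", size=20, fluor_volume=60),    # small, high ratio
    Colony("B", size=100, fluor_volume=150),
    Colony("C", size=120, fluor_volume=300),
    Colony("D", size=300, fluor_volume=400),  # large, high total signal
]
```

With these toy values, the ratio screen picks the small colony A, the fluorescent-volume screen picks the large colony D, and the sequential screen with a window of 80 to 200 picks C. Each screen encodes a different assumption about what the signal means.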

Figure 2

Myth: It’s OK, the Instrument Designers Say It’ll Work

Truth: You are the only true expert in how you plan to use an instrument. This second myth–truth pairing comes from our experience with the SimCell platform from BioProcessors Corporation (www.bioprocessors.com). This technology platform is designed around microbioreactor chambers that simulate bioreactor conditions for mammalian cell cultures. The microbioreactors are manipulated using a robotic arm integrated within a system of incubators, microfluidics, detectors, and sampling/addition injectors.

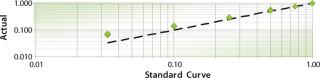

Our media optimization work on the SimCell system relies on its ability to accurately mix individual media components, a capability well documented in the equipment specifications provided by the vendor. Given the large design-of-experiment (DOE) arrays we use for media optimization, we decided to confirm those specifications through an extended qualification program that simulated very complex mixing studies. When we evaluated dispensing accuracy with two pumps, we found very good agreement with expected media compositions, consistent with the equipment specifications. However, in more complex studies requiring up to eight pumps, accuracy degraded significantly at lower dispensing volumes (Figure 3). The simpler two-pump studies would not have predicted that observation.
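A qualification study like this comes down to grouping measured dispenses by condition (pump count and nominal volume) and comparing the error against an acceptance limit. The sketch below is one hypothetical way to summarize such data; the record format, the 5% tolerance, and the numbers are assumptions for illustration, not the actual qualification protocol or results.

```python
from collections import defaultdict
from statistics import mean


def summarize_accuracy(records, tolerance_pct):
    """records: iterable of (n_pumps, target_ul, measured_ul) tuples.

    Returns {(n_pumps, target_ul): (mean_abs_error_pct, passes)}, where
    passes is True when the mean absolute error is within tolerance_pct.
    """
    groups = defaultdict(list)
    for n_pumps, target, measured in records:
        groups[(n_pumps, target)].append(abs(measured - target) / target * 100.0)
    summary = {}
    for key, errors in groups.items():
        err = mean(errors)
        summary[key] = (err, err <= tolerance_pct)
    return summary


# Hypothetical measurements (not study data): two- and eight-pump runs
# at a high and a low nominal dispense volume.
records = [
    (2, 50.0, 49.5), (2, 50.0, 50.4), (2, 5.0, 4.95),
    (8, 50.0, 49.0), (8, 5.0, 4.4), (8, 5.0, 4.5),
]
result = summarize_accuracy(records, tolerance_pct=5.0)
```

Grouping by both pump count and volume is the point: a test matrix that only covers two pumps can never expose an effect that appears only when many pumps dispense small volumes.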

Figure 3

After much troubleshooting, we traced the inaccuracy to a cumulative dead-volume effect that appeared when multiple pumps were actuated. Identifying the gap between the instrument’s capabilities and our intended use allowed Invitrogen and BioProcessors to work together to improve the system. Those changes greatly improved its accuracy, and the team delivered a technology platform that meets the project needs. Had we not planned the time and resources to prequalify the platform for its intended use, the dispensing issues could have had a detrimental impact, increasing both the time and the total cost of the studies.
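Why would an effect hidden at two pumps appear at eight? A deliberately simplified model helps build intuition. It is an assumption for illustration, not the mechanism that was actually identified: suppose each actuated pump loses a fixed dead volume d from its programmed dispense, and a well of total volume V is split equally among n pumps. Each component then receives V/n, so its relative error is d / (V/n) = n·d / V, and the total missing volume n·d grows with every pump added.

```python
def per_component_error_pct(total_volume_ul, n_pumps, dead_volume_ul):
    """Toy model (assumption): each pump under-delivers by a fixed dead volume.

    With the well volume split equally across n_pumps, each component's
    relative error is dead_volume / (total_volume / n_pumps).
    """
    per_pump_ul = total_volume_ul / n_pumps
    return dead_volume_ul / per_pump_ul * 100.0


def total_shortfall_ul(n_pumps, dead_volume_ul):
    """Cumulative volume lost across all actuated pumps in the toy model."""
    return n_pumps * dead_volume_ul


# Hypothetical values: a 200 uL well and 0.5 uL lost per actuated pump.
for n in (2, 4, 8):
    print(n, per_component_error_pct(200.0, n, 0.5), total_shortfall_ul(n, 0.5))
```

Under these assumed numbers the per-component error rises from 0.5% with two pumps to 2% with eight, which is why a two-pump qualification alone can look reassuring.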

Myth: Installed and Tested = Good to Go!

Truth: Make sure you understand the upstream and downstream workflows needed for success. As you begin to use a high-throughput technology, think about the workflows needed to run the instrument. This third myth–truth pairing demonstrates how a powerful tool can easily be undermined by a lack of supporting processes.

One such tool for process development is the Hamilton StarPlus liquid handler system (www.hamiltoncompany.com). Our team uses this system to prepare the complex media arrays used in media optimization programs. The system has 12 pipetting channels that can compose medium from up to 80 liquid concentrates and dispense it into specific locations within well plates or tubes. The StarPlus liquid handler serves both as a stand-alone platform and as part of an integrated solution with the SimCell platform. Preparing materials for, and analyzing results from, hundreds of related experimental combinations quickly becomes a daunting task. If that work is not managed well, the system becomes constrained by users’ ability to set up experiments or to measure their output. Properly integrated within a workflow, the technology allows users to run experimental designs that would otherwise be out of reach.
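Much of the upstream work is turning a media design into pipetting instructions. The sketch below shows one hypothetical way to do that with the dilution relation C1·V1 = C2·V2, filling the remainder of each well with diluent. The `Transfer` record and its fields are assumptions made for illustration; this is not the Hamilton software’s worklist format or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    source: str        # stock or diluent name (hypothetical labels)
    destination: str   # destination well ID
    volume_ul: float


def build_worklist(design, stock_conc, final_volume_ul, wells):
    """design: one dict per condition, {component: target final concentration}.
    stock_conc: {component: stock concentration}, in the same units as targets.
    wells: destination well IDs, one per condition.

    Each stock volume comes from C_stock * V_stock = C_final * V_final;
    diluent makes up the rest of the final volume.
    """
    if len(design) != len(wells):
        raise ValueError("need one destination well per condition")
    worklist = []
    for condition, well in zip(design, wells):
        used_ul = 0.0
        for component, target in sorted(condition.items()):
            volume = target * final_volume_ul / stock_conc[component]
            if volume > 0:
                worklist.append(Transfer(component, well, volume))
                used_ul += volume
        if used_ul > final_volume_ul:
            raise ValueError(f"condition for {well} exceeds the final volume")
        if used_ul < final_volume_ul:
            worklist.append(Transfer("diluent", well, final_volume_ul - used_ul))
    return worklist


# Hypothetical single-condition design (illustrative names and units).
worklist = build_worklist(
    design=[{"glucose": 2.0, "glutamine": 4.0}],
    stock_conc={"glucose": 100.0, "glutamine": 200.0},
    final_volume_ul=1000.0,
    wells=["A1"],
)
```

Generating the worklist from the design itself, rather than by hand, also yields the record needed later to map each well back to its intended composition.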

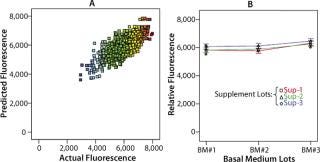

One example of this technology working within a well-designed workflow is a project to analyze potential sources of variability in manufacturing a three-component product kit: a basal medium, a nutrient supplement, and a cell growth stimulator. In total, we evaluated 30 media combinations in 1,152 well locations using fluorescence analysis (Figure 4, Table 1). The study demonstrated that the variability contributed by each kit component was within acceptable limits for the kit. Validation of this quality would have been impractical to achieve manually, and without the proper upstream systems for preparing stock reagents and downstream analytical tools to test the 1,000+ samples, it would not have been achievable at all.
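Table 1 reports a coefficient of variation (CV) for each ANOVA factor and for assay error. The sketch below shows the underlying calculation for the simplest case: a balanced one-way random-effects ANOVA, where the between-level variance is estimated as (MS_between − MS_within)/n. It is a one-factor simplification assumed for illustration. The actual kit study involved three components plus assay error, and its model is not given here. The data are hypothetical.

```python
from statistics import mean


def one_way_variance_components(groups):
    """groups: equal-size replicate lists, one per level (e.g., per lot).

    Returns (between-level variance, within-level variance, grand mean)
    from a balanced one-way random-effects ANOVA.
    """
    k = len(groups)
    n = len(groups[0])
    if k < 2 or n < 2 or any(len(g) != n for g in groups):
        raise ValueError("need a balanced design with >= 2 levels and >= 2 replicates")
    grand = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    ms_between = n * sum((m - grand) ** 2 for m in group_means) / (k - 1)
    ms_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g) / (k * (n - 1))
    var_between = max((ms_between - ms_within) / n, 0.0)  # truncate negative estimates at zero
    return var_between, ms_within, grand


def cv_pct(variance, grand_mean):
    """Coefficient of variation, in percent, for a variance component."""
    return variance ** 0.5 / grand_mean * 100.0


# Hypothetical fluorescence readouts: three lots, duplicate wells each.
var_lot, var_error, grand = one_way_variance_components([[10, 12], [14, 16], [18, 20]])
```

Expressing each variance component as a CV relative to the grand mean puts factor-level variability and assay noise on the same scale, which is the form Table 1 uses.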

Figure 4

Table 1: Summary table lists the coefficients of variation associated with each factor in ANOVA and the random error of the assay.

Myth: We’ve Confirmed the Workflows, Now We’re Ready

Truth: Before starting, make sure you know which data you actually want and how you’re going to manage that information. Before you begin swimming in data, make sure that your experiments are planned out — not just what you want to test, but also how you’re going to process the information. What process will you use for mapping test results back to experimental conditions? How will you analyze the results? What is the objective of your study, and how will your data support it?
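One concrete answer to “how will you map test results back to experimental conditions?” is to keep a plate map keyed by plate and well, then join instrument output against it and refuse to proceed if anything is unmapped or missing. The CSV column names below (`plate`, `well`, `condition`, `signal`) are hypothetical; real instrument exports will differ.

```python
import csv
import io


def join_results(plate_map_csv, results_csv):
    """Join a plate map to instrument results on (plate, well).

    Raises KeyError for a result in an unmapped well and ValueError
    for a mapped well with no result, so gaps surface before analysis.
    """
    conditions = {}
    for row in csv.DictReader(io.StringIO(plate_map_csv)):
        conditions[(row["plate"], row["well"])] = row
    joined, seen = [], set()
    for row in csv.DictReader(io.StringIO(results_csv)):
        key = (row["plate"], row["well"])
        if key not in conditions:
            raise KeyError(f"result for unmapped well {key}")
        seen.add(key)
        joined.append({**conditions[key], "signal": float(row["signal"])})
    missing = set(conditions) - seen
    if missing:
        raise ValueError(f"no result for wells: {sorted(missing)}")
    return joined


# Hypothetical plate map and results (results arrive in a different order).
plate_map = "plate,well,condition\nP1,A1,basal_lot1\nP1,A2,basal_lot2\n"
results = "plate,well,signal\nP1,A2,1530.5\nP1,A1,1422.0\n"
rows = join_results(plate_map, results)
```

Failing loudly on unmapped or missing wells is a design choice: with over a thousand samples, a silent mismatch is far more costly than a stopped script.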

The siren song of high-throughput technology is that users can run many more conditions and collect much more data. Unfortunately, this power can lead to a lack of discipline in designing studies, or to confusion when sorting through data that appear contradictory because some key parameter was not properly controlled. In some ways, the limited experimental complexity of traditional methods imposes a clarifying discipline that is worth carrying into the design of high-throughput studies. The critical success factor in applying high-throughput instrumentation is maintaining the integrity of the experimental structure within a much larger parameter space.

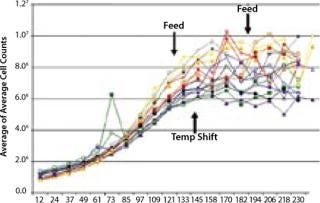

For example, a simple fed-batch study on the SimCell platform using a four-factor, two-level full-factorial design comprised 16 parameter combinations and revealed conditions that generated a twofold improvement in titer (Figure 5). That level of experimental design is certainly within reach of traditional tools, but what do the results look like for the kind of 10-factor experimental design conducted in a complex optimization project? Each additional factor multiplies the size of the experimental space, so each graph can quickly turn into a book chapter. Deciphering the results requires mastery of experimental design and data management.
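The growth in design size is easy to see by enumerating a two-level full factorial: k factors give 2^k runs, so 4 factors give 16 and 10 factors give 1,024. The sketch below also estimates main effects (mean response at the high level minus mean response at the low level). The response function is synthetic and purely illustrative; it is not data from the fed-batch study.

```python
from itertools import product


def full_factorial(k):
    """All 2**k runs of a two-level design, coded -1 (low) and +1 (high)."""
    return list(product((-1, 1), repeat=k))


def main_effects(design, response):
    """Main effect of each factor: mean response at +1 minus mean at -1."""
    k = len(design[0])
    effects = []
    for j in range(k):
        high = [y for run, y in zip(design, response) if run[j] == 1]
        low = [y for run, y in zip(design, response) if run[j] == -1]
        effects.append(sum(high) / len(high) - sum(low) / len(low))
    return effects


design = full_factorial(4)
# Synthetic response: factors 0 and 3 help, factor 1 hurts, factor 2 does nothing.
response = [10 + 3 * x[0] - 1 * x[1] + 0 * x[2] + 0.5 * x[3] for x in design]
effects = main_effects(design, response)  # twice each coefficient: [6.0, -2.0, 0.0, 1.0]
```

The same two functions work unchanged for `full_factorial(10)`, but the output then has 1,024 runs, 10 main effects, and 45 two-factor interactions to interpret. That gap is where experimental-design expertise matters.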

Figure 5

If careful planning goes into experimental design, including meaningful variations of relevant factors, then careful analysis of the results may reveal unexpected gains in overall yield. For example, several incremental improvements revealed during analysis may combine into a substantial improvement that could not readily be detected by other means. Yet there is no substitute for experience in choosing the test conditions: poor initial choices will limit the benefit of performing DOE analysis. Think your studies through to the end before starting them, because once these instruments get going, you can generate a great deal of useless data very quickly.
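A quick worked example shows how modest gains can compound. Assume, hypothetically, that analysis reveals five independent improvements of 10% each and that their effects multiply. Real factor effects may interact or fail to combine this cleanly.

$$
\text{combined gain} = \prod_{i=1}^{5} (1 + 0.10) = 1.10^{5} \approx 1.61
$$

Under those assumptions, five individually small changes together raise yield by about 61%, which is an improvement that no single-factor experiment would reveal.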

The Promise of HTPD

In the end, do you get better results with larger experimental designs? The tools we integrated into our service platform yielded insights that lead us to this answer: it depends on what you need to know. Be wary of using a tool because you have it rather than because you need it.

We believe that the technologies described above represent best-in-class platforms that can increase the effectiveness of development processes. They have been shown to improve results when used appropriately. If you think one of these technology platforms may help solve a problem you have encountered, our advice would be to first consider a collaboration with a company that has already made the investment of putting that technology into practice. You can learn from such a project. And if you decide to take the plunge and buy your own high-throughput system, I hope the four truths offered here will give you a head start.
